Applied Radiology Focus: Understanding multimodal fusion imaging


Medical imaging technology coupled with computer animation techniques has provided a virtual look into the human body that rivals the best cinematic productions. Holography and molecular imaging combine to take us on an incredible journey to witness the innermost workings of our body organs. Now, recent advances in hardware and software imaging technology bring another dimension, multimodal fusion, to this medical incarnation.

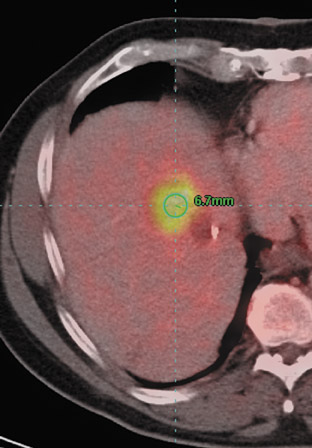

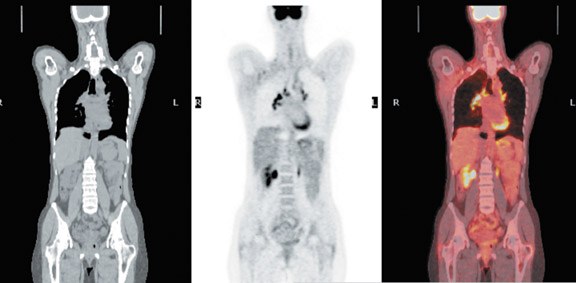

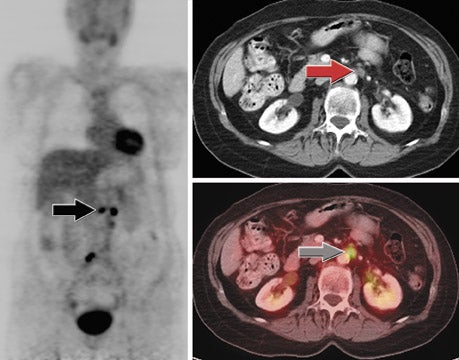

To be of the utmost diagnostic value, medical images must give clinicians two important and interrelated pieces of information: exactly what is happening and precisely where in the body it is happening. Anatomic imaging technologies such as magnetic resonance imaging (MRI) and computed tomography (CT) clearly show morphologic features, such as size and shape, but provide no information on proliferation or inflammation. Is that suspicious mass in the left breast a malignant tumor or just fibrosis? Functional imaging technologies, such as positron emission tomography (PET) or single-photon emission computed tomography (SPECT), use radiolabeled tracers such as glucose analogs or monoclonal antibodies to provide critical information on cellular activity, but cannot provide the anatomic detail needed for precise localization. Is that metastatic hot spot in the muscle or in the nearby bone? Physicians need both anatomic and functional data to make the definitive diagnosis that is so important to the patient.

The true value of multimodal fusion imaging lies in bringing anatomic and functional information together with high sensitivity and specificity.

Technology of fusion imaging

How it works

Traditionally, separate images obtained from the same patient at different times and with different imaging modalities were simply compared by radiologists visually, side by side. This method is prone to errors because of the difficulty of mentally superimposing complex image data. Manual superimposition of the images, however, is also prone to error because of the inherent difficulty of accurately aligning two images of a dynamic, living being. Gross changes in patient position or subtle changes in the position of internal organs, such as the bladder or diaphragm, can make alignment difficult even for two consecutive images from the same modality.

Accurate alignment of two images requires both congruent matching of image coordinates and simultaneous matching of the voxel size. Tomographic images can be registered by using internal or external landmarks, called fiducials. Internal fiducials (an anatomic part, such as a kidney or chest wall) are subject to the variable physiologic state of the patient and may not be stable enough to provide a reference throughout treatment. External fiducials are specifically constructed with unique geometries to provide each two-dimensional (2D) pixel cross-section with a unique, stable coordinate system that can be identified in different image modalities. This allows construction of a fully registered volumetric dataset wherein each voxel has unique coordinates and opacity information that will allow translation and rotation of the target and object images. 1 Some alignment using fiducials is semiautomatic; however, intervention by the radiologist is usually needed to visually align some elements.
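Once the same external fiducials have been identified in both data sets, the rigid transformation between them can be estimated in closed form. The sketch below is a minimal illustration, not the method of any particular product: it assumes the fiducials have already been located and paired, and it works in a single 2D slice, where the least-squares rotation has a simple atan2 solution (3D implementations typically use an SVD-based approach such as the Kabsch algorithm). The function names are hypothetical.

```python
import math

def rigid_register_2d(moving, fixed):
    """Least-squares rotation and translation that map `moving` fiducials onto `fixed`.

    Both arguments are equal-length lists of (x, y) positions in mm, paired by
    index (moving[i] is the same physical marker as fixed[i]).
    Returns (theta_radians, (tx, ty)).
    """
    if len(moving) != len(fixed) or len(moving) < 2:
        raise ValueError("need at least two paired fiducials")
    n = len(moving)
    # Centroid of each point set
    mx = sum(x for x, _ in moving) / n
    my = sum(y for _, y in moving) / n
    fx = sum(x for x, _ in fixed) / n
    fy = sum(y for _, y in fixed) / n
    # Sum the dot and cross products of the centered point pairs
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(moving, fixed):
        px, py, qx, qy = px - mx, py - my, qx - fx, qy - fy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    # The rotation angle that best superimposes the centered sets
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated moving centroid onto the fixed centroid
    tx = fx - (c * mx - s * my)
    ty = fy - (s * mx + c * my)
    return theta, (tx, ty)

def apply_rigid_2d(point, theta, t):
    """Rotate a point by theta about the origin, then translate it by t."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x - s * y + t[0], s * x + c * y + t[1])
```

Applying `apply_rigid_2d` with the returned parameters to each moving fiducial and measuring the remaining distance to its partner gives the fiducial registration error, a quick check for a misidentified marker.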

Hybrid hardware systems that combine CT and PET scanners are now available; they eliminate the problems of acquiring images at different times and minimize spatial artifacts due to patient repositioning. Although these systems can be expensive to purchase, they require only one examination for the patient and can reduce scheduling time for the hospital or clinic. However, distortion can occur even when both data sets are acquired in a single session, because of differences in imaging protocols. For example, three-dimensional (3D) CT data are acquired during a single breath-hold, whereas PET data are obtained over a longer period of quiet breathing. Because of these inherent differences in acquisition speed, accurate fusion of these images must incorporate some software transformation of the data, generally with rigid-body algorithms. Software image registration systems are also necessary for retrospective fusion of serial studies to assess response to therapy.
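Even on a hybrid scanner, where the CT and PET data share a patient coordinate frame, the two data sets are usually reconstructed on grids with different voxel sizes, so matching voxels means passing through physical coordinates in millimeters. The sketch below assumes axis-aligned grids described only by voxel spacing and origin (it ignores the orientation information a DICOM header carries) and uses nearest-neighbor lookup; the function names and grid values are illustrative assumptions.

```python
def voxel_to_mm(index, spacing, origin):
    """Convert a voxel index (i, j, k) to scanner coordinates in mm."""
    return tuple(o + i * s for i, s, o in zip(index, spacing, origin))

def mm_to_voxel(point, spacing, origin):
    """Convert scanner coordinates in mm to the nearest voxel index on a grid."""
    return tuple(round((p - o) / s) for p, s, o in zip(point, spacing, origin))

def pet_to_ct_index(pet_index, pet_grid, ct_grid):
    """Map a PET voxel to the CT voxel at the same physical location.

    Each grid is a hypothetical (spacing_mm, origin_mm) pair of 3-tuples."""
    return mm_to_voxel(voxel_to_mm(pet_index, *pet_grid), *ct_grid)

# Illustrative grids: 4 mm isotropic PET voxels, 1 x 1 x 2.5 mm CT voxels
PET_GRID = ((4.0, 4.0, 4.0), (-200.0, -200.0, 0.0))
CT_GRID = ((1.0, 1.0, 2.5), (-250.0, -250.0, 0.0))
```

With these grids, PET voxel (10, 20, 5) lies at (-160, -120, 20) mm, which is CT voxel (90, 130, 8). Production software would interpolate between voxels rather than round, and would apply any rigid-body correction in the millimeter step.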

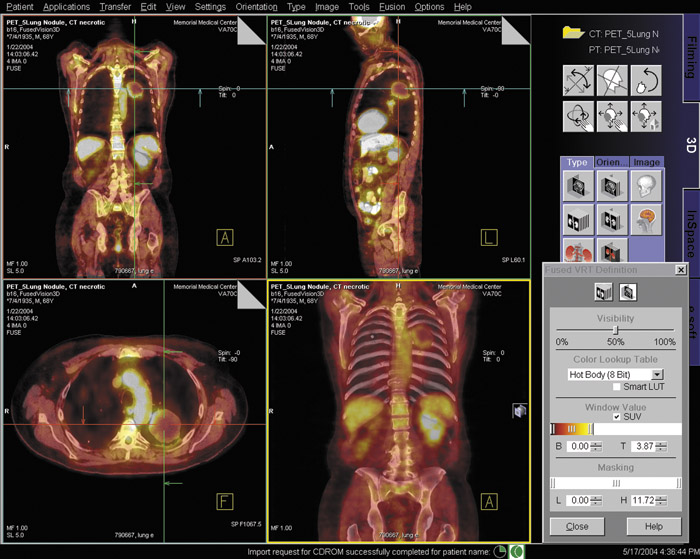

Recent advances in software modeling techniques have produced complex nonrigid, or deformable, algorithms that perform sophisticated image registration for fusion imaging. This software can be installed on a workstation that imports Digital Imaging and Communications in Medicine (DICOM)-compliant data from PET, SPECT, CT, or MRI, or it can be used in conjunction with a hybrid hardware scanning system to fuse the images into a single, aligned dataset that displays all the clinical information from multiple modalities. Deformable techniques first align areas of localized density on both images and then deform, or "warp," the functional (eg, PET) data to fit the shape of the anatomic (eg, CT) image. With these algorithms, radiologists can fuse images, such as PET and MRI or ultrasound and CT, that would be difficult to combine physically in a hybrid system. Fusion of three or more images is also possible through such software manipulation. A fully automated 3D registration system has been developed that can retrospectively fuse thoracic images from standalone PET and CT scanners, or serial image data from hybrid PET/CT scanners, effectively overcoming difficulties with nonlinear deformation during breath-hold CT imaging. 2
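The final "warp" step of a deformable registration can be illustrated independently of how the deformation is estimated. In the sketch below, a displacement field (assumed to have been produced by some registration algorithm, and given in pixel units) tells each output pixel where to sample the functional image, and bilinear interpolation fills in values between pixel centers. This backward-mapping scheme is one common way to apply a deformation, not necessarily the one used by the system cited above; the function names are hypothetical.

```python
import math

def bilinear(image, x, y):
    """Sample a 2D image (list of rows) at fractional column x, row y.

    Neighbors that fall outside the image contribute zero."""
    h, w = len(image), len(image[0])
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    total = 0.0
    for dy, wy in ((0, 1.0 - fy), (1, fy)):
        for dx, wx in ((0, 1.0 - fx), (1, fx)):
            xi, yi = x0 + dx, y0 + dy
            if 0 <= xi < w and 0 <= yi < h:
                total += wx * wy * image[yi][xi]
    return total

def warp(moving, field):
    """Backward warp: output[y][x] samples `moving` at (x + u, y + v).

    field[y][x] = (u, v) is a displacement in pixels, assumed to come from
    a deformable registration step that is not shown here."""
    return [[bilinear(moving, x + field[y][x][0], y + field[y][x][1])
             for x in range(len(field[0]))]
            for y in range(len(field))]
```

A uniform field is just a translation; a deformable algorithm differs in that every pixel can carry its own displacement.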

Display of information

Multimodal fusion data must be delivered visually in a way that is easy for the physician to interpret. Anatomic CT or MRI data are usually displayed in grayscale, while PET and SPECT data are displayed in color to indicate functional information. Fusion images usually display a blend of new colors calculated to show combined intensities while simulating a transparency effect. Because some monitors may lose data and color blending can artificially increase perceived intensities, side-by-side viewing of the original images is recommended when interpreting fusion data. 3 An alternative display method uses a smaller image window that opens interactively to reveal data from the other modality.
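The color blending described above is often implemented as alpha compositing of a color-mapped functional image over a grayscale anatomic image. The sketch below blends a single pixel; the "hot"-style color ramp, the [0, 1] normalization, and the default opacity are assumptions for illustration rather than any vendor's display pipeline.

```python
def hot_colormap(v):
    """Map a normalized value in [0, 1] to (r, g, b) in [0, 1].

    A simple 'hot'-style ramp (black -> red -> yellow -> white), assumed for illustration."""
    v = min(max(v, 0.0), 1.0)
    return (min(1.0, 3.0 * v),
            min(1.0, max(0.0, 3.0 * v - 1.0)),
            min(1.0, max(0.0, 3.0 * v - 2.0)))

def fuse_pixel(ct_gray, pet_value, alpha=0.5):
    """Alpha-blend a grayscale CT pixel with a color-mapped PET pixel.

    Both inputs are assumed to be pre-normalized to [0, 1] (e.g. after CT
    windowing and PET scaling); alpha is the opacity given to the PET layer."""
    pet_rgb = hot_colormap(pet_value)
    return tuple((1.0 - alpha) * ct_gray + alpha * c for c in pet_rgb)
```

Because each output color mixes both inputs, a blended pixel cannot be mapped back uniquely to either original intensity, which is consistent with the advice to review the original images side by side.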

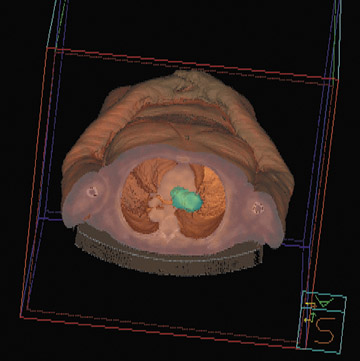

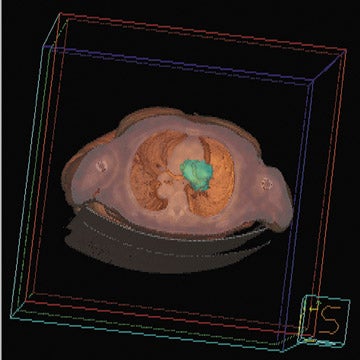

These methods limit the display of volumetric data to slice-by-slice viewing on a 2D computer screen or a series of photographs. The resulting images have no true depth cues, so the physician must mentally superimpose and compare the image information for each slice viewed. While this method is appropriate for assessing the intricate details of certain pathologic conditions, it is not helpful for spatially demanding tasks, such as image-guided surgery. If depth cues (such as linear or aerial perspective, shadows, and texture gradients) are added to a 2D image, the result is much more realistic and is called a 2.5-dimensional (2.5D) representation. However, 2.5D images are based on fixed planar reconstructions and must be redrawn for each new perspective, which requires vast amounts of computational power. This is the method commonly used in computer animation games and virtual reality simulations.

A true 3D medical image display provides depth cues and accurate anatomic spatial relationships, which can be very helpful in planning complex surgeries or invasive treatments. Both stereography and holography can be used to generate and display a 3D image. Stereography gives each eye a slightly different 2D view of the image through auxiliary devices, such as polarized glasses, to produce a static 3D effect. Stereograms display a fixed image with no parallax (the apparent displacement of objects caused by a change in the observer's position) and thus provide no way to view around an object. Holography uses split beams of coherent light to record 3D information about an object as a special type of interferogram on photosensitive film. The information is retrieved by illuminating the film with a reference light beam, creating an image that exists in 3D space independent of the observer. Holograms can have both horizontal and vertical parallax. The advantage of this method is that it requires no computational power to provide variable viewing perspectives (see sidebar, Holofusion).

Overview of image modality combinations

The combination of various imaging modalities creates a powerful diagnostic and therapeutic tool that can be more useful than any single method. For example, the organ segmentation made possible by CT can enhance discrimination of subtle activity peaks shown by PET. Thus, the two modalities combine synergistically to help radiation oncologists plan and monitor cancer treatment. PET can also provide information on the extent and quality of blood perfusion into the cardiac muscle, while CT can delineate the course and internal structure of the arteries to the heart. This information can help cardiologists assess the presence and extent of coronary artery disease.

Fusion duos

Another application of PET/CT fusion is localization of residual disease in patients with thyroid cancer. 4 Metastatic foci can be particularly difficult to identify in this region because of a lack of anatomic landmarks. Paul W. Ladenson, MD, Director of the Division of Endocrinology, Department of Medicine, at Johns Hopkins Medical Institutions, Baltimore, MD, uses PET/CT fusion for long-term monitoring of his patients and notes that PET imaging has a specificity of 90% in patients with thyroid carcinoma. According to Dr. Ladenson, PET/CT fusion is used in perhaps 20% of patients with elevated thyroglobulin levels in whom cervical sonography failed to adequately localize the site of metastasis.

The fusion of PET and CT images may be the most common combination, but it is not the only useful one. Yamamoto and co-workers 5 used SPECT/CT fusion images to improve the localization of radioiodine uptake in the management of patients with differentiated thyroid carcinoma. SPECT and CT images acquired separately in 17 patients were fused on a computer workstation. The two separate imaging modalities agreed in only 29% of the patients, and fused images were considered to be of clinical benefit in 15 of 17 patients (88%). Fused images showed abnormal findings in normal-sized lymph nodes in 4 patients; 5 occult bone metastases and 1 muscle metastasis in 6 patients; and abnormal findings in 2 patients with normal CT scans. In addition, fused images precisely localized areas of abnormal iodine uptake in 3 patients with bone metastases.

SPECT images can be acquired simultaneously with CT images using a technology known as combined transmission and emission tomography (TET). Even-Sapir and coworkers 6 explored the clinical value of TET for the evaluation of endocrine tumors. They concluded that TET improved image interpretation in 11 patients (44%) with abnormal findings and provided additional information of clinical value, such as planning of surgery, a change in treatment approach, or an altered prognosis, in 9 patients (33%).

Liu and colleagues 7 used fused MRI and CT images to guide implantation of deep brain electrodes in patients with Parkinson's disease. T2-weighted MR images were fused with a stereotactic CT scan to locate the subthalamic nucleus, a possible target of electrical stimulation for symptom suppression. The lens of the eye and the pineal gland served as internal fiducials. Of 7 patients undergoing bilateral electrode implantation with this technique, 3 had an excellent clinical outcome, 2 were significantly improved, and 2 were unchanged. There were no complications.

Contrast-enhanced MRI has been fused with conventional X-ray mammography by using a combination of pharmacokinetic modelling, projection geometry, and a flexible, wavelet-based feature extraction technique with thin-plate-spline, nonrigid warping. 8 The result is a robust fusion framework that combines high-resolution structural features, such as calcifications and fine spiculations, with functional information describing the pharmacokinetic interaction between the contrast agent and tumor vascularity.
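Thin-plate-spline warping interpolates a smooth 2D deformation that carries a set of control points exactly onto their matched positions while minimizing the bending of the space between them. The sketch below is a generic textbook thin-plate spline, not the authors' framework: the control-point pairs are supplied by hand (the study derived its features with wavelet-based extraction), at least three non-collinear points are assumed, and the small linear solver is included only to keep the example self-contained. All names are hypothetical.

```python
import math

def _u(r2):
    # TPS radial basis U = r^2 log r^2, written in terms of r^2; U(0) = 0
    return r2 * math.log(r2) if r2 > 0 else 0.0

def _solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_tps(src, dst):
    """Return a function mapping 2D points so that src[i] lands exactly on dst[i]."""
    n = len(src)
    size = n + 3
    a = [[0.0] * size for _ in range(size)]
    for i, (xi, yi) in enumerate(src):
        # Radial-basis block between every pair of control points
        for j, (xj, yj) in enumerate(src):
            a[i][j] = _u((xi - xj) ** 2 + (yi - yj) ** 2)
        # Affine block (1, x, y) and its transpose
        for k, val in enumerate((1.0, xi, yi)):
            a[i][n + k] = val
            a[n + k][i] = val
    # One independent system per output coordinate: n weights, then 3 affine terms
    coeffs = [_solve(a, [p[axis] for p in dst] + [0.0, 0.0, 0.0]) for axis in (0, 1)]

    def mapping(x, y):
        out = []
        for c in coeffs:
            v = c[n] + c[n + 1] * x + c[n + 2] * y
            for i, (xi, yi) in enumerate(src):
                v += c[i] * _u((x - xi) ** 2 + (y - yi) ** 2)
            out.append(v)
        return tuple(out)

    return mapping
```

When the control points differ only by an affine transformation, the fitted weights are zero and the spline reduces to that affine map; nonzero weights appear only where local warping is needed.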

Slomka and colleagues 9 reported on results of using an automated, retrospective registration algorithm for the fusion of 3D power Doppler ultrasound images and MR angiography of the carotid arteries without the use of fiducials. The method relies on an iterative search of mutual information to find the best rigid-body transformation after thresholding volume data to reduce uncorrelated noise. In this study, the algorithm correctly registered 107 of 110 fusion images, with an average registration time of 4 minutes.
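Mutual information rewards any consistent statistical relationship between the intensities of two images, which is why it suits multimodal pairs whose gray levels differ, such as Doppler ultrasound and MR angiography. The sketch below computes mutual information from a joint histogram, applies a simple intensity threshold, and searches exhaustively over integer shifts of a 1D intensity profile. It is a deliberately reduced illustration: the published method searched iteratively over full 3D rigid-body transformations, and the bin count, threshold, 8-bit intensity range, and function names here are assumptions.

```python
import math
from collections import Counter

def mutual_information(a, b, bins=8, max_value=256):
    """Mutual information (bits) between two equal-length intensity lists.

    Intensities are assumed to lie in [0, max_value) and are quantized into
    `bins` histogram bins before the joint histogram is built."""
    n = len(a)
    qa = [min(int(v * bins / max_value), bins - 1) for v in a]
    qb = [min(int(v * bins / max_value), bins - 1) for v in b]
    joint = Counter(zip(qa, qb))
    count_a, count_b = Counter(qa), Counter(qb)
    mi = 0.0
    for (i, j), c in joint.items():
        p_ij = c / n
        mi += p_ij * math.log2(p_ij / ((count_a[i] / n) * (count_b[j] / n)))
    return mi

def threshold(values, level):
    """Set intensities below `level` to zero to suppress uncorrelated background noise."""
    return [v if v >= level else 0 for v in values]

def best_shift(fixed, moving, max_shift, level=0):
    """Try every integer shift of `moving` and keep the one with the highest MI.

    A 1D stand-in for a rigid-body search; only overlapping samples are compared."""
    fixed, moving = threshold(fixed, level), threshold(moving, level)
    best = None
    for s in range(-max_shift, max_shift + 1):
        pairs = [(fixed[i], moving[i + s]) for i in range(len(fixed))
                 if 0 <= i + s < len(moving)]
        mi = mutual_information([p[0] for p in pairs], [p[1] for p in pairs])
        if best is None or mi > best[1]:
            best = (s, mi)
    return best
```

For example, a bright pulse in `fixed` that appears two samples later and with inverted contrast in `moving` is still found at shift 2, because mutual information depends on how consistently the intensities co-occur rather than on whether they match.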

Fusion triplets

Three or more imaging modalities can be fused to achieve optimum clinical outcomes for the patient. In a study of radiotherapy planning for surgical treatment of oropharyngeal and nasopharyngeal carcinomas, MR and CT images were fused with PET images by means of an automatic multimodality image registration system that used the brain as an internal fiducial. 10 Fused images were useful in determining gross tumor volume and clinical target volume. Identification of tumor-free areas of the upper neck was performed more easily, and sparing of the parotid tissue was possible in 71% of the patients.

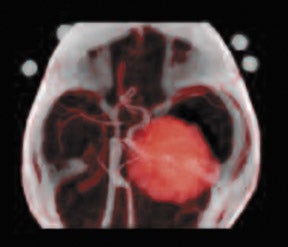

A new approach for 3D cardiac imaging fuses images from PET, MRI, and magnetocardiography (MCG). 11 A model-based registration system is used to align MR and PET images, and a marker-based system is used to align the MR and MCG images. The result is an easy-to-interpret, individualized, 3D, biventricular model of the heart that includes functional information.

Holofusion

In what may be the most futuristic imaging display method yet, CT, PET, MRI, and other volumetric data can be transformed into a life-sized, radiologically accurate, 3D holographic fusion image that preserves the critical relationships between and within anatomic, physiologic, and pathologic features. The fusion hologram is made by illuminating a series of cross-sectional 2D fusion images with a split beam of coherent light, as in the construction of holograms of physical objects, except that a single piece of photographic film is exposed multiple times, once for each slice. This patented method (Digital Holography technology; Voxel, Inc., Provo, UT) records the X-Y contrast detail of each slice, as well as the Z-axis distance that separates each slice from the film and from every other slice. Illuminating this film with a special portable viewer (the Voxbox display) produces a life-sized "anatomical twin" of the patient's anatomy projected in space. Physicians may actually place instruments into this hologram to trial-fit a prosthesis or plan a surgical approach. In a newer fusion technique, also developed by Voxel, the original diagnostic images may first be made into separate holograms, which are then fused using an accurate 3D registration system.

Integration into the healthcare industry

Multimodal fusion imaging provides more accurate information about patient anatomy and physiology and, thus, should result in better clinical outcomes and patient care. Appropriately, this new technology is changing diagnostic and therapeutic practice. 12 PET/CT scanners are now so popular that some consider stand-alone PET equipment to be a second-class system.

The high specificity and sensitivity of PET/CT fusion has resulted in dramatic benefit for some patients. Dr. Ladenson remembers a case of a 37-year-old man scheduled for a neck dissection for thyroid cancer in whom the preoperative PET/CT scan showed previously undetected metastasis in the mediastinum. The surgical plan was altered and the residual cancerous tissue was resected.

Fortunately, medical insurance providers have begun to recognize the value of fusion imaging. Dr. Ladenson noted that a significant hurdle was recently overcome when the Centers for Medicare & Medicaid Services agreed to reimburse PET scans in patients with thyroid cancer whose thyroglobulin levels were >10 ng/mL.

Some hurdles remain, however, in bringing multimodal fusion imaging into routine use. According to Piotr Slomka, PhD, one of the early developers of fusion technology (now a Research Scientist at the Department of Imaging/AIM Program, Cedars-Sinai Medical Center, Los Angeles, CA), it is difficult to perform many fusions in practice because this usually requires coordination among hospital departments and transfer of image data between workstations. One shortcoming is that, at some institutions, the picture archiving and communications system (PACS), the hospital information system (HIS), and the fusion workstations are not integrated. Patients often have previous scans that would provide useful information on disease progression or response to treatment. "An intelligent patient management system would search the PACS for all scans of a particular patient and pull those images automatically into the workstation," Dr. Slomka noted. Such a system will become increasingly important as software advances allow fusion of different imaging modalities.
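At its core, a search of the kind Dr. Slomka describes filters an image index by patient and modality and orders the results by date. The sketch below stands in for a real PACS query, which would go through DICOM network services: the in-memory index of series records and the function name are hypothetical, although the keys borrow the standard DICOM attribute keywords PatientID, Modality, and StudyDate, and "PT", "CT", and "MR" are the standard DICOM modality codes for PET, CT, and MR.

```python
def prior_series(index, patient_id, modalities=None):
    """Return one patient's image series, newest first, optionally limited to some modalities.

    `index` is a hypothetical in-memory list of series records (dicts) keyed by
    DICOM attribute keywords. StudyDate uses the DICOM YYYYMMDD string form,
    which sorts chronologically as plain text."""
    matches = [rec for rec in index
               if rec["PatientID"] == patient_id
               and (modalities is None or rec["Modality"] in modalities)]
    return sorted(matches, key=lambda rec: rec["StudyDate"], reverse=True)
```

A workstation could then load the newest PET series together with the prior CT series for fusion, instead of relying on staff to locate them across departments.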

Dr. Slomka also remarked that data such as the calculated standardized uptake value (SUV) may be lost when PET images are stored in some PACS. The SUV, which is typically high in malignant tissue and low in benign tumors, can provide important diagnostic information that is not available in fused images currently retrieved from such PACS. In his view, the next generation of PACS should be an integrated system that can store and retrieve all image data, link to workstations, and possibly even perform software-based fusion.
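The body-weight SUV divides the measured tissue activity concentration by the injected dose per unit body weight, so it can be recomputed only if the dose, weight, and timing information travel with the pixel data. The sketch below assumes fluorine-18 (half-life about 109.8 minutes), a tissue density of 1 g/mL, and decay correction of the injected dose to scan time; clinical software may use other normalizations (for example, lean body mass) and other timing conventions, and the function name is hypothetical.

```python
F18_HALF_LIFE_MIN = 109.77  # physical half-life of fluorine-18, in minutes

def suv_bw(tissue_kbq_per_ml, injected_mbq, weight_kg,
           minutes_from_injection=0.0, half_life_min=F18_HALF_LIFE_MIN):
    """Body-weight SUV = tissue concentration / (decay-corrected dose / body weight).

    Assumes a tissue density of 1 g/mL. Since 1 MBq/kg equals 1 kBq/g,
    kBq/mL divided by MBq/kg is dimensionless under that assumption."""
    dose_at_scan_mbq = injected_mbq * 0.5 ** (minutes_from_injection / half_life_min)
    return tissue_kbq_per_ml / (dose_at_scan_mbq / weight_kg)
```

For example, 5 kBq/mL measured in a 70 kg patient injected with 350 MBq gives an SUV of 1.0 without decay correction; if the scan starts one half-life after injection, the effective dose halves and the same concentration gives an SUV of 2.0.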

If images could be prealigned by the software automatically, the fusion process could be greatly simplified at the workstation. Currently the positioning of the patient, even for specific diagnostic scans, is not standardized, so that, for example, a long-axis view of the heart is not automatically recognizable to the fusion software. "What is needed is a global body positioning system," said Dr. Slomka, "that would allow the software to tilt the image to make it easier to begin the exact voxel-by-voxel matching for registration." Dr. Slomka hopes that imaging equipment vendors or, perhaps, the National Electrical Manufacturers Association (NEMA) will drive an effort to institute such standards. These standards would also facilitate sharing of medical images between workstations within a hospital and between institutions worldwide.
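One established way to give registration software a head start without a positioning standard is principal-axes pre-alignment: compute each object's dominant orientation from its second-order moments and rotate one image so that the axes agree before fine voxel-by-voxel matching begins. The sketch below illustrates automatic pre-alignment only and is not the system Dr. Slomka proposes; it assumes a binary 2D mask of the organ or body outline is already available, the function names are hypothetical, and a principal axis is ambiguous by 180 degrees, which a real system would have to resolve.

```python
import math

def principal_axis_angle(mask):
    """Orientation (radians) of the dominant axis of a binary 2D mask.

    Uses second-order central moments; x runs along columns and y down the rows,
    so angles follow the image (y-down) convention. The axis is ambiguous by pi."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        raise ValueError("mask is empty")
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    mu20 = sum((x - cx) ** 2 for x, _ in pts) / n
    mu02 = sum((y - cy) ** 2 for _, y in pts) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / n
    return 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)

def prealign_rotation(fixed_mask, moving_mask):
    """Rotation (radians) to apply to the moving image so its axis matches the fixed one."""
    return principal_axis_angle(fixed_mask) - principal_axis_angle(moving_mask)
```

A standardized patient position would make this step largely unnecessary, which is the appeal of the "global body positioning system" described above.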

Conclusion

Fusion technology melds functional and anatomic information and holds great promise for diagnostic imaging and improved patient care.

Ultimately, it should be possible to fuse many different imaging modalities into a 3D visual dataset of the patient that can exploit the best features of each imaging technology. Fused images can be used to plan surgical procedures, guide invasive or noninvasive therapeutic interventions, and monitor individual response to therapy. As the medical community adapts to acquire, store, and transfer fusion data efficiently, this technology will become more widely available and perhaps even commonplace, to the great benefit of patients everywhere.


Citation

Applied Radiology Focus: Understanding multimodal fusion imaging. Appl Radiol.

June 4, 2004