Using informatics to improve the quality of radiology

Dr. Nagy is an Associate Professor, Director of Quality and Informatics Research, Department of Radiology, University of Maryland School of Medicine, Baltimore, MD.

One of the roles of an informatics architect is to provide an infrastructure that enables radiologists to read images immediately wherever they are. Such rapid access to digital imaging reduces delays in interpretation, speeds report turnaround time, and hastens clinical decision-making, all of which clearly improve efficiency.

Over the years, however, it has become equally clear that an accelerated work pace, a focus on productivity, and the use of distance medicine can sterilize work relationships. When a technologist no longer comes into the reading room to hang films, something gets lost in the relationship between radiologist and technologist. When referring physicians no longer engage the radiologist in consultations, something gets lost in that relationship too. These changes threaten to compromise quality.

In our desire to leverage information technology (IT) to be as productive as possible, we have threatened our work relationships. This doesn’t need to be so. Essentially, IT was born to communicate, whether by voicemail, e-mail, text messaging, instant messaging, or paging. We can do a better job of using some of these communication vehicles to create a culture of quality within radiology, making it easier to do the right thing while being as productive as possible. This article will discuss 3 tools we have developed at the University of Maryland to enhance quality through informatics.

Quality-control reporting

The first challenge many radiology practices face when they “go digital” is incorporating the quality-control practices that were used in the film environment. In the past, radiologists could note on the film if the images were poorly collimated or were substandard in some other way. In an electronic environment, there is little feedback between radiologists and technologists.

The result can be a downward spiral in quality. Often, radiologists submit quality-control reports using the same paper-based forms they were using years ago. The reports go to modality supervisors, who discuss them with the technologists. But the radiologists typically don't receive any feedback on actions taken and don't observe any improvement. As a result, it is difficult for radiologists to see the value in submitting future quality-control reports.

Once radiologists become apathetic about reporting quality issues, many things can go awry. If technologists don’t get feedback on the quality of their work, they are likely to think either that they’re doing a great job or that radiologists in their institution don’t care about image quality. Radiology supervisors may know that radiologists are unhappy, but they have no data to use in taking action. The result is a disappointing stalemate.

Information technology systems must be able to carry feedback between radiologists and technologists to ensure quality processes. The key ingredients for change at the University of Maryland were a picture archiving and communication system (PACS) and a simple Web-based issue tracking tool that enables radiologists to submit quality-control issues, assigns issues to owners, and notifies users when the issue has been resolved. We also supplied our technologists and modality supervisors with digital pagers. When a radiologist reports a quality issue, the system pages the technologist and modality supervisor immediately.

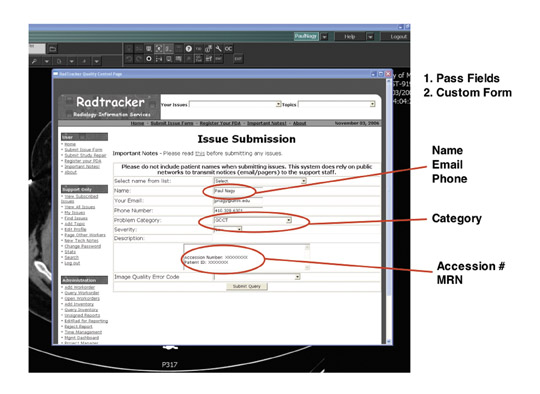

To encourage radiologists to report quality issues, it is important to remove as many barriers as possible and to make reporting simple. With this in mind, we synchronized quality control with our clinical workflow by adding a button to our PACS that launches a Web-based quality-control tool called Radtracker 1 (Figure 1).

The issue-submission Web page provides the user name, the study session number, the patient medical record number, and the modality. Within a single pull-down menu, the radiologist can select what is wrong with the images (poor patient positioning, for example) and can add comments. When the radiologist clicks on “submit,” the modality supervisor and technologist receive a text message about the issue and how to correct it. The technologist then resolves the issue, and the radiologist receives an e-mail about actions taken.
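The submit, notify, and resolve cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not the Radtracker implementation: the class names, field names, and the `notify` callback (which stands in for paging and e-mail) are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Callable, List, Optional


@dataclass
class QCIssue:
    """One quality-control report (hypothetical field names)."""
    reporter: str          # radiologist's user name
    session_number: str    # study session number
    mrn: str               # patient medical record number
    modality: str
    problem: str           # choice made in the pull-down menu
    comments: str = ""
    owners: List[str] = field(default_factory=list)
    action_taken: Optional[str] = None
    resolved_at: Optional[datetime] = None


class IssueTracker:
    """Assigns owners to an issue and notifies people at each step."""

    def __init__(self, notify: Callable[[str, str], None]) -> None:
        # notify(recipient, message) is a stand-in for a pager or e-mail gateway.
        self.notify = notify
        self.issues: List[QCIssue] = []

    def submit(self, issue: QCIssue, technologist: str, supervisor: str) -> None:
        # The technologist and the modality supervisor become the owners.
        issue.owners = [technologist, supervisor]
        self.issues.append(issue)
        for owner in issue.owners:
            self.notify(
                owner,
                f"QC issue, {issue.modality} study {issue.session_number}: {issue.problem}",
            )

    def resolve(self, issue: QCIssue, action_taken: str) -> None:
        # Closing the loop: the reporting radiologist hears what was done.
        issue.action_taken = action_taken
        issue.resolved_at = datetime.now()
        self.notify(
            issue.reporter,
            f"QC issue on study {issue.session_number} resolved: {action_taken}",
        )


# Example run: collect notifications in a list instead of sending them.
sent = []
tracker = IssueTracker(notify=lambda recipient, message: sent.append((recipient, message)))
issue = QCIssue("dr_smith", "S-0042", "MRN-0001", "CR", "Poor patient positioning")
tracker.submit(issue, technologist="tech_a", supervisor="sup_b")
tracker.resolve(issue, "Repeated the view with corrected positioning")
```

Passing the notification channel in as a callback keeps the workflow logic separate from the delivery mechanism, so the same sketch would apply whether messages go out by pager, text message, or e-mail.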

Using this system, we have gone from approximately 5 to 10 paper-based quality-control reports per month in 2006 to 300 per month today. This does not reflect deterioration in quality; in fact, only roughly 1% of our annual volume of studies has a quality-control issue. Instead, better quality-control reporting has enabled us to focus on the root cause of quality-control issues and to track how quickly we respond to these issues. In approximately 40% of cases, we resolve the issue within an hour.
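A response-time figure such as the share of issues resolved within an hour can be computed directly from submission and resolution timestamps. The sketch below assumes each issue is available as a `(submitted_at, resolved_at)` pair, with `None` for issues still open; the function name and the sample data are illustrative only and are not drawn from our system.

```python
from datetime import datetime, timedelta


def fraction_resolved_within(issues, limit=timedelta(hours=1)):
    """Return the share of issues resolved within `limit` of submission.

    issues: iterable of (submitted_at, resolved_at) pairs; resolved_at is
    None for issues that are still open, which count as not resolved.
    """
    issues = list(issues)
    if not issues:
        return 0.0
    fast = sum(
        1
        for submitted_at, resolved_at in issues
        if resolved_at is not None and resolved_at - submitted_at <= limit
    )
    return fast / len(issues)


# Made-up sample: five issues, two of them resolved within the hour.
t0 = datetime(2008, 1, 7, 9, 0)
sample = [
    (t0, t0 + timedelta(minutes=20)),
    (t0, t0 + timedelta(minutes=60)),
    (t0, t0 + timedelta(hours=5)),
    (t0, t0 + timedelta(days=2)),
    (t0, None),
]
share = fraction_resolved_within(sample)
```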

We have also uncovered new types of quality issues, beyond those related to image acquisition. Data quality issues can affect the radiologist’s workflow. For example, if the technologist doesn’t sign off and complete a study in time, the radiologist might not be able to finalize it. Using this process, we’re better able to understand problem areas in the department.

We have used the quality-control data to create knowledge bases that we can click through and explore. When we do in-service training, we use the knowledge bases to find various types of cases. Using URL-based integration, we can even launch the PACS simply by clicking on a case file.
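URL-based integration generally means that a link carries enough information for the viewer to open the right study. The following sketch shows the idea under stated assumptions: the base address `https://pacs.example.org/viewer` and the `action` and `session` parameter names are hypothetical, since every PACS vendor defines its own launch syntax.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Hypothetical viewer address; a real PACS defines its own launch URL.
PACS_BASE_URL = "https://pacs.example.org/viewer"


def pacs_launch_url(session_number, base_url=PACS_BASE_URL):
    """Build a link that asks the viewer to open one study."""
    # urlencode escapes spaces, slashes, and other reserved characters.
    query = urlencode({"action": "open", "session": session_number})
    return f"{base_url}?{query}"


url = pacs_launch_url("S 2008/0042")

# Round trip: the receiving side can recover the original identifier.
params = parse_qs(urlsplit(url).query)
```

A knowledge-base page or worklist then only needs to render such a link next to each case.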

Every few months, a radiologist, technologist, modality supervisor, and physicist meet for an hour to work through all of the quality-control issues in a given imaging section. This offers radiologists an ideal opportunity to lead a discussion on how quality-control issues arise. In the past, our modality supervisors were very good at fixing problems on a day-to-day basis but didn’t necessarily understand the magnitude of the issue. Now, when they see that a problem is occurring many times a year, they realize it’s worth the effort to determine why the problem is happening and how to remove the root causes.

We also use this system for generating report cards (Figure 2). These report cards enable our technologists to see how well they’re doing, how many quality reports they’re getting from radiologists over a period of time, and how they compare with other technologists. We have found that most technologists are very responsive. Once they see the data, they try to understand how they can do a better job. This is a very powerful tool for creating a culture of quality.
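A report card of this kind reduces to counting reports per technologist over a period and setting each count against the section as a whole. The sketch below is an assumption about how such a tally might be built, not a description of our software; the function name, the output keys, and the use of the section mean as the comparison point are choices made for the example.

```python
from collections import Counter
from statistics import mean


def report_cards(reports, technologists):
    """Summarize QC reports per technologist for one period.

    reports: iterable of technologist names, one entry per QC report.
    technologists: everyone in the section, so that people with no
    reports still appear on the card.
    """
    counts = Counter(reports)
    per_tech = {tech: counts.get(tech, 0) for tech in technologists}
    section_mean = mean(list(per_tech.values()))
    return {
        tech: {
            "reports": n,
            "section_mean": section_mean,
            "above_mean": n > section_mean,
        }
        for tech, n in per_tech.items()
    }


# Made-up period: four reports spread across a three-person section.
cards = report_cards(["ann", "bob", "ann", "ann"], ["ann", "bob", "cy"])
```

Raw counts favor technologists who perform fewer studies, so a production version would probably divide by each person's study volume before comparing.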

For radiologists, this system provides a mechanism to report quality issues and removes any reason for being apathetic. We now have data-driven discussions with the radiologists to try to understand the root causes of quality issues. The radiologists feel that the technologists are working with them, that we are a team, and that we have a good feedback mechanism and good communication.

Technologist peer review

The second quality-control tool that we have implemented, technologist peer review, also harkens back to the days of film. Acquiring images has always been an art that requires training and feedback to perfect. In the past, as senior film technologists processed films, they would review images and take junior technologists to task for quality problems. Through peer pressure, junior technologists would be motivated to improve their performance.

Peer pressure is an enormous motivator that we don’t use well enough in healthcare to improve performance. At our institution, we use informatics as a tool for applying peer pressure. We no longer have the luxury of doing in-line quality control while processing film. Radiologists need to read images right away and report them immediately. However, we can do retrospective quality control.

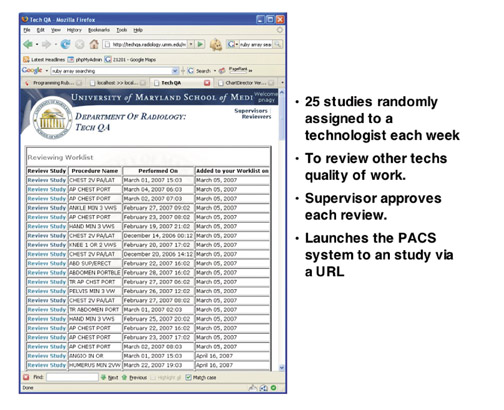

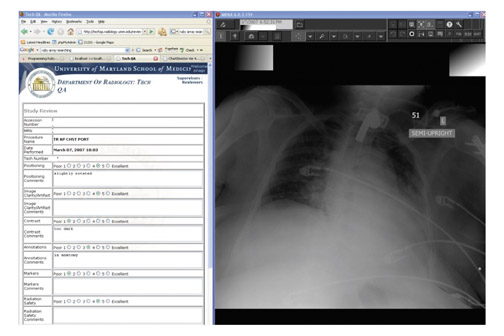

To achieve this goal, we have built a fully automated Web-based Tech Quality Assurance (QA) tool that captures all the studies done by a section, then randomly assigns approximately 5% of them to a volunteer to review (Figure 3). Reviewing technologists are given a worklist with the procedure names and dates. Because of synchronization between information systems, they can launch the study in the PACS simply by clicking on the integrated URL.
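The random assignment step can be illustrated with a short sketch. It assumes the section's studies are available as a list of identifiers and that the sampled studies are dealt out to volunteers in turn; the function name, the round-robin distribution, and the optional `seed` parameter are assumptions for the example rather than details of the Tech QA tool.

```python
import random


def assign_reviews(study_ids, reviewers, fraction=0.05, seed=None):
    """Sample roughly `fraction` of the studies and build reviewer worklists."""
    study_ids = list(study_ids)
    reviewers = list(reviewers)
    if not study_ids or not reviewers:
        return {}
    rng = random.Random(seed)  # a fixed seed makes a run reproducible
    k = max(1, round(len(study_ids) * fraction))
    sampled = rng.sample(study_ids, k)  # sampling without replacement
    worklists = {reviewer: [] for reviewer in reviewers}
    # Deal the sampled studies out to the volunteers in turn.
    for i, study in enumerate(sampled):
        worklists[reviewers[i % len(reviewers)]].append(study)
    return worklists


# Made-up section: 200 studies, two volunteer reviewers.
studies = [f"study-{n:03d}" for n in range(200)]
worklists = assign_reviews(studies, ["tech_a", "tech_b"], seed=1)
```

A real deployment might also keep technologists from being assigned their own studies, which this sketch does not attempt.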

Once the study has been launched, the technologist reviewer rates it on a scale of 1 to 5, with 1 being poor and 5 being excellent. The ratings cover patient positioning, image clarity/artifacts, contrast, annotations, markers, and radiation safety. The reviews are then approved or disapproved by a modality supervisor. This step enables us to train our volunteers to become better reviewers.
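The rating scheme lends itself to a simple aggregate: a mean score per category across reviews. The sketch below assumes each review is stored as a dictionary of scores plus a supervisor approval flag, and it assumes that disapproved reviews are left out of the averages; both the data layout and that exclusion rule are illustrative choices.

```python
from statistics import mean

# The six rated categories, with hypothetical key names.
CATEGORIES = (
    "positioning",
    "image_clarity",
    "contrast",
    "annotations",
    "markers",
    "radiation_safety",
)


def validate_review(scores):
    """Raise ValueError unless every category has an integer score from 1 to 5."""
    missing = set(CATEGORIES) - set(scores)
    if missing:
        raise ValueError(f"missing categories: {sorted(missing)}")
    for category in CATEGORIES:
        value = scores[category]
        if not isinstance(value, int) or not 1 <= value <= 5:
            raise ValueError(f"{category} must be an integer from 1 to 5")


def category_means(reviews):
    """Average each category over supervisor-approved reviews only."""
    approved = [r["scores"] for r in reviews if r["approved"]]
    for scores in approved:
        validate_review(scores)
    if not approved:
        return {}
    return {c: mean(s[c] for s in approved) for c in CATEGORIES}


# Made-up reviews: two approved, one disapproved by the supervisor.
good = dict.fromkeys(CATEGORIES, 5)
mixed = {**good, "markers": 3, "positioning": 4}
rejected = dict.fromkeys(CATEGORIES, 1)
means = category_means([
    {"scores": good, "approved": True},
    {"scores": mixed, "approved": True},
    {"scores": rejected, "approved": False},
])
```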

We have used this technique to review more than 5000 studies so far. We have found that we're doing well on contrast, data quality, and annotations, but have room for improvement in markers, positioning, and radiation safety (mostly collimation).

We can also use the Tech QA tool in preparing individual technologist report cards. This is a way of giving very tailored feedback on how to improve their processes. We can also use this tool as a knowledge base to identify the best and worst studies in each section, so that technologists can learn by both doing and seeing.

Communication

Communication between radiologists and referring physicians plays another important role in quality. Take the case of a critical finding that warrants rapid communication. The Joint Commission on Accreditation of Healthcare Organizations (JCAHO) requires that radiologists document not only that a critical finding has been delivered but also how long it took to deliver the finding.

Delivery of critical findings can be especially challenging in a large in-patient medical center. At the University of Maryland, we have roughly 1100 attending physicians and another 900 residents. Often the physician who orders a study is not the right person to take delivery of the critical finding. This is a source of frustration for radiologists.

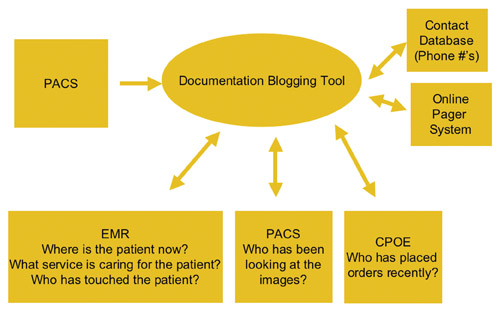

To help identify the right person to receive information in critical situations, we have developed a data mining tool that identifies who is involved in patient care (Figure 4). This tool can perform a real-time query of the electronic medical record to determine where the patient is located and which service team is caring for the patient. Often, it is more important to identify the appropriate service team than to identify the individual physician.

We can also use the critical alert tool to determine who has been in contact with the patient in the last 24 hours. Knowing which physicians and nurses are giving care to the patient offers an important clue in determining to whom to deliver critical information. We can also look at the PACS to see who has been looking at the images and the computer-based order entry system to see who has been placing physician orders for the patient.

When the radiologists launch the critical alert tool from our PACS, they are presented with all of the patient information and names of clinicians who have been involved in patient care, along with information on how old the contact data are. Once we have all this information, we mine a centralized physician contact database with phone numbers and an integrated online paging system. The radiologist simply clicks on the “contact” button next to each name.
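The candidate list described above amounts to merging recent contact events from several systems and showing how old each one is. The sketch below assumes those events have already been pulled from the medical record, the PACS, and order entry as `(clinician, source, timestamp)` tuples; the function name, the source labels, and the sample data are hypothetical.

```python
from datetime import datetime, timedelta


def candidate_contacts(events, now, window=timedelta(hours=24)):
    """List clinicians with recent patient contact, most recent first.

    events: iterable of (clinician, source, timestamp) tuples gathered
    from the different systems. Only the latest event per clinician
    inside the window is kept.
    """
    latest = {}
    for clinician, source, timestamp in events:
        age = now - timestamp
        if age < timedelta(0) or age > window:
            continue  # outside the look-back window
        if clinician not in latest or timestamp > latest[clinician][1]:
            latest[clinician] = (source, timestamp)
    ranked = sorted(latest.items(), key=lambda item: item[1][1], reverse=True)
    return [
        {
            "clinician": clinician,
            "source": source,
            "age_hours": (now - timestamp).total_seconds() / 3600,
        }
        for clinician, (source, timestamp) in ranked
    ]


# Made-up events for one patient.
now = datetime(2008, 1, 7, 12, 0)
events = [
    ("dr_lee", "order entry", now - timedelta(hours=6)),
    ("dr_lee", "PACS", now - timedelta(hours=1)),
    ("nurse_kim", "medical record", now - timedelta(hours=3)),
    ("dr_old", "PACS", now - timedelta(hours=30)),
]
candidates = candidate_contacts(events, now)
```

Showing the age of each contact lets the radiologist judge how much weight to give it before paging anyone.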

In addition, we have built a blogging tool that can be launched from the PACS. It documents all of the radiologist’s efforts to communicate a critical finding and records that the finding was successfully delivered, to whom, and when.
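Documenting both the delivery and the time it took requires little more than a timestamped list of attempts. The sketch below is one assumed way to structure such a log; the class names, fields, and sample entries are illustrative and do not describe the tool itself.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class Attempt:
    """One effort to reach someone about a critical finding."""
    at: datetime
    recipient: str
    method: str       # for example "page" or "phone"
    delivered: bool


class CriticalFindingLog:
    """Timestamped record of communication attempts for one finding."""

    def __init__(self, finding: str, identified_at: datetime) -> None:
        self.finding = finding
        self.identified_at = identified_at
        self.attempts: List[Attempt] = []

    def record(self, at: datetime, recipient: str, method: str, delivered: bool) -> None:
        self.attempts.append(Attempt(at, recipient, method, delivered))

    def delivery(self) -> Optional[Attempt]:
        """Return the earliest successful attempt, or None if there is none yet."""
        successes = [a for a in self.attempts if a.delivered]
        return min(successes, key=lambda a: a.at) if successes else None

    def minutes_to_delivery(self) -> Optional[float]:
        """Minutes from identifying the finding to its delivery."""
        done = self.delivery()
        if done is None:
            return None
        return (done.at - self.identified_at).total_seconds() / 60


# Made-up example: one unanswered page, then a successful phone call.
log = CriticalFindingLog("Pneumothorax", datetime(2008, 1, 7, 14, 0))
log.record(datetime(2008, 1, 7, 14, 10), "dr_lee", "page", delivered=False)
log.record(datetime(2008, 1, 7, 14, 25), "dr_park", "phone", delivered=True)
elapsed = log.minutes_to_delivery()
```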

Healthcare IT systems are a gold mine of information. If we can provide some of that information in a relevant format to radiologists, it can help them to make decisions and to communicate information quickly in a critical environment.

Conclusion

Communication plays a vital role in how we deliver radiology services and in the quality of those services. Information technology is well suited to delivering tools that improve quality and communication. The time has come for radiologists to insist that vendors provide these tools. PACS stands for picture archiving and communication system. It’s time to put the communication back in PACS.